Bye-bye, #badbots!

We are absolutely thrilled to announce the public release of BotGuard, which protects your site against hacking and malicious bot intrusion!

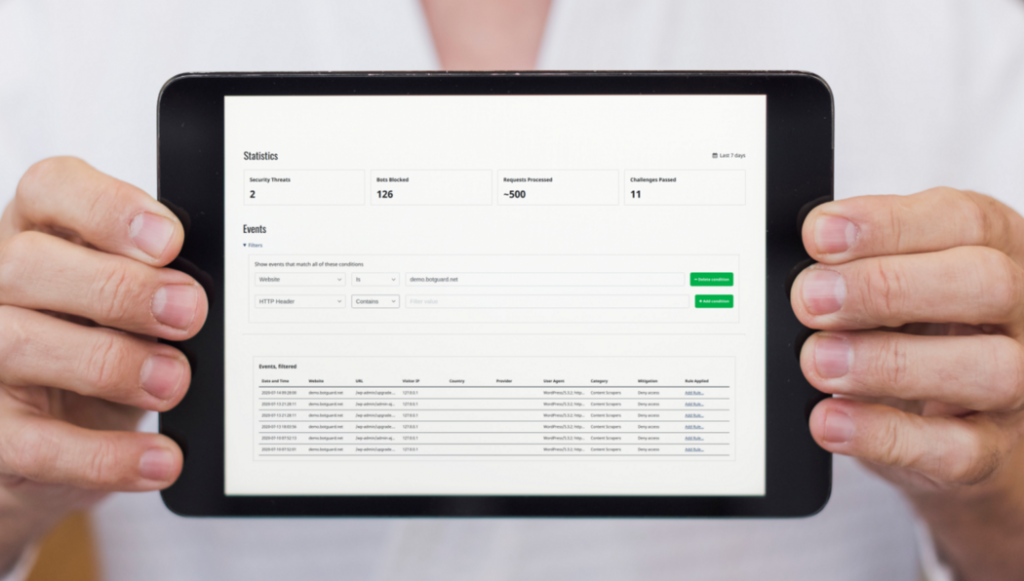

Most importantly, we have implemented a new visitor classification. Starting today, BotGuard can distinguish between humans, search engines, social networks, widely known cloud services, content scrapers, bots that emulate humans, and various kinds of suspicious user activity.

With BotGuard’s powerful new rule editor, you can now tailor your web protection to the traffic you want on your site. For example, you can choose how to handle the traffic from each of the categories above.

At the heart of all this is our state-of-the-art, high-performance system, which analyzes each user request in real time against a database of millions of digital fingerprints that indicate malicious activity.

If you are new to BotGuard, simply sign up here.

If you are a BotGuard beta user: first, thank you for giving us a chance and for all the feedback you have provided! Second, please be sure to update your integration code and rules to take advantage of our new and improved blocking rule settings.

You may need to update the integration module for Apache or NGINX, depending on which web server you use. Please also review your rules in the rule editor, as some of our classifications may have changed. This makes sure that bots you believe are blocked are not in fact getting through, and that no useful bots are being blocked, although the latter is very unlikely.

As always, our research team continuously monitors web traffic for new threats and updates our system to protect you against the latest potential attacks.

If you have ideas or questions, write to me at nik@botguard.net.
